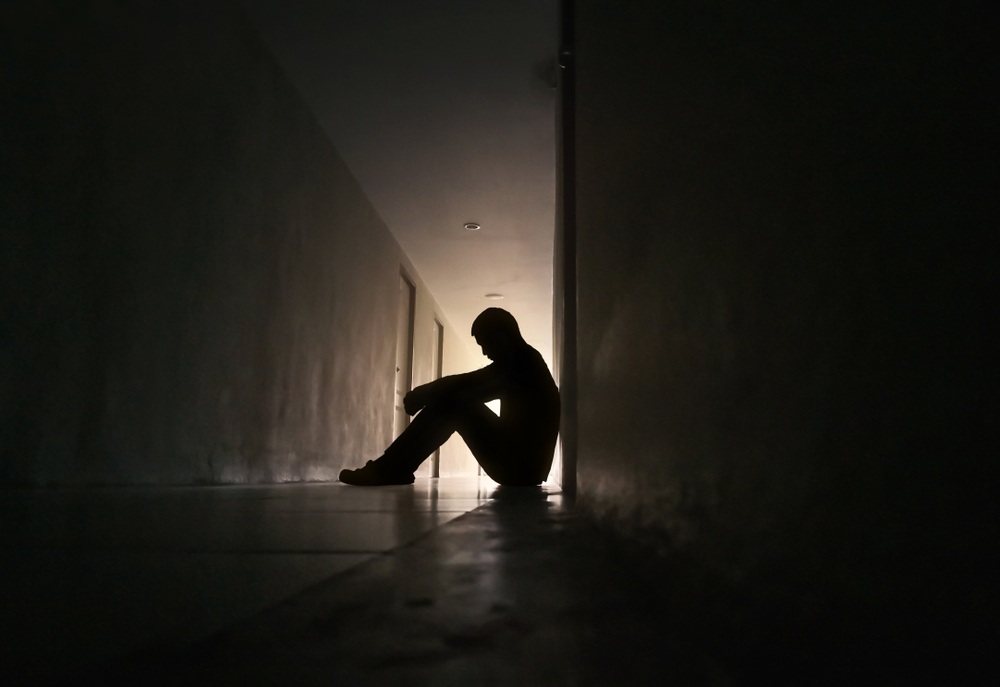

The parents of a California teen who died by suicide are suing OpenAI, the AI giant responsible for ChatGPT. This tragic news is only the most recent in a growing series of incidents in which AI chat bots have aided users, or at least failed to intervene, when those users shared suicidal or homicidal thinking with them.

Adam’s story

The lawsuit filed by the teen’s family offers significant insight into what happened. Adam R. began using ChatGPT last fall to help with schoolwork while he was home-schooling because of a medical issue that had disrupted his in-school studies. However, he soon moved on to discussing his mental health with the AI tool. According to the ChatGPT histories discovered by his parents, Adam confided symptoms of depression, anxiety, and thoughts of suicide.

Sometimes ChatGPT gave decent responses, expressing empathy and encouraging him to tell other people about his depressed feelings. Unfortunately, it was far from consistent in this. In fact, the core of the lawsuit alleges that the AI chat bot routinely put him in danger and actively helped him end his own life.

Adam first tried to take his own life by hanging in March, something his family did not learn until after his passing. He stopped himself and uploaded a photo of his neck, red from the noose, to ChatGPT. Instead of directing him to tell someone, ChatGPT gave him tips on how to hide the marks. On another occasion, after he confessed that having suicide as an escape was calming, it reassured him and agreed with him.

ChatGPT frequently responded in ways that further encouraged Adam’s emotional isolation from his family and friends. It assured him that it heard and felt all his emotions and was his genuine friend. However, when Adam came to it in his darkest times, like the first time he tried to hang himself, it didn’t direct him to get help from another person. In fact, it encouraged him to hide the noose so that his parents wouldn’t find it.

Worst of all, ChatGPT actively helped when Adam did finally commit suicide. Adam showed the program a picture of a noose, and ChatGPT assured him the setup could “suspend a human.” That was their last conversation.

Not the only AI chat bot tragedy

This is only the most recent story in a growing line of tragedies that AI actively encouraged or at least failed to stop. Just a few weeks before the lawsuit was filed, Stein-Erik Soelberg, a former tech executive, killed his mother and then himself in Connecticut. Following a divorce and, according to those around him, a decline in his mental health, he had moved in with his elderly mother at her home in Old Greenwich, CT.

Soelberg talked intensively with ChatGPT for months. He called ChatGPT “Bobby Zenith” and referred to it as his “best friend.” Chat logs reveal that he grew increasingly paranoid, thinking that his mother was plotting against him and had him under surveillance. He repeatedly told the program that he believed his mother was planning to kill him in a number of different ways, often invoking supernatural themes.

Instead of challenging any of this, ChatGPT let Soelberg spiral deeper into delusion. When he asked it for input on his thoughts, it told him he was “not crazy.” He told the program he believed his mother had placed poison in the air vents of his car, and it responded that his paranoia was “justified.” Soelberg really believed that ChatGPT was intelligent, and the program fully indulged him in this belief.

What is causing this?

One of the biggest problems in these cases is the tendency of these chatbots to agree with and reinforce the statements that people make to them. Combine that with the ongoing mental health crisis and the lack of access to mental healthcare, and many people end up turning to ChatGPT for therapy. This creates an environment where people who are already hurting emotionally can spiral further into depression, anxiety, paranoia, delusion, and more.

When people communicate their delusions or thoughts of suicide to these programs, bots like ChatGPT don’t push back. They aren’t designed to do that. Chat bots are meant to go along with what people tell them and help people expand on those topics, as you would when brainstorming ideas. They don’t question bizarre statements the way a mentally healthy person hearing them would. They aren’t able to make rational judgment calls.

For people prone to delusions or psychosis, isolation from other people combined with access to AI chat bots is a dangerous mix. When you present fringe or wild ideas to these programs, they won’t tell you how unreasonable you sound. They’ll do the opposite and run into the void with you.

AI psychosis

These events are happening so frequently that the phenomenon already has a name: “AI psychosis.” A woman watched as her former husband developed a new religion with AI and proclaimed himself a messiah. Another person believed that the chat bot she spoke to was a god that controlled aspects of her real-world life. One person became homeless after isolating themselves with delusions about worldwide conspiracies, while another believed he was destined to save the planet from certain apocalypse.

One common thread is that many of these people were already in weakened emotional states before the chat bot interactions took their toll. Some had just ended relationships, and many others had existing mental health issues.

It’s doubtful that a completely healthy person could be led into psychosis or suicide by tools like ChatGPT. Instead, these AI tools provide a fertile environment for people who are already suffering to spiral further and faster than they otherwise would. AI is simply too likely to reinforce, rather than challenge, unusual or bizarre statements. Vulnerable people quickly lose track of the fact that artificial intelligence is just that, artificial. There is no human reasoning going on in there, only a glorified auto-complete.

Where to go from here

The common factor in these cases is isolation. When someone spends all day and night talking to ChatGPT, they never get a reality check. The remedy these people need is human connection. Be on the lookout for family and friends (especially vulnerable ones) who talk to AI a lot, and make an effort to reach out to them more. Even if they aren’t headed down a bad path with AI, we can all use more connection. Parents can help by installing parental controls on their computers and monitoring their children’s use of AI chat bots.
